As a teacher, you may find it difficult to tell whether your classroom’s test results accurately show how well your students have mastered core standards and learning objectives. When most of your students miss a question, is that a sign that you didn’t deliver the material effectively? Or was something simply wrong with how the question or its answer options were phrased?

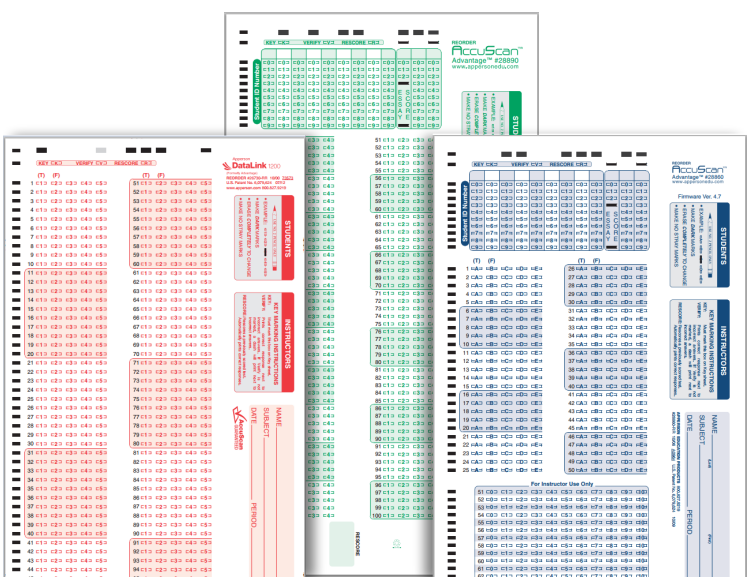

Beyond common metrics like class averages, other indicators can give you more meaningful insight into your students’ understanding of the standards, as well as into your own performance as an instructor and test writer. If you’re not getting the results you expect, these measures can help you improve your test items before the next exam. With data scoring and reporting software such as DataLink Connect, you can easily evaluate important metrics such as classroom performance by core standard and the quality of specific test items through individual item analysis.

So how exactly do you determine if your tests are fulfilling their purpose? What should you be looking for?

1. Individual Item Analysis by Difficulty

A well-balanced assessment contains questions of varying difficulty. DataLink Connect’s Exam Item Analysis gives you a detailed report showing the percentage of incorrect answers for each question. If the success rate on a particular item is too low, you may need to reword the question for clarity, revisit the material with your students before the next exam, or devote more time to the subject in your curriculum going forward. If the success rate on an item is higher than expected, you may need to make the question more challenging or write more plausible alternative answers.
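To make the idea concrete, here is a minimal Python sketch of how an item’s difficulty (the share of students who answered it correctly, often called its p-value) can be computed from a table of right/wrong scores. This is not DataLink Connect’s code: the function names, the layout of the score table, and the 0.30/0.90 cutoffs for "too hard" and "too easy" are hypothetical choices for illustration only.

```python
def item_difficulty(scores: list[list[int]]) -> list[float]:
    """Proportion of students answering each item correctly (the p-value).

    Assumed layout: scores[s][i] is 1 if student s answered item i
    correctly, else 0.
    """
    n_students = len(scores)
    n_items = len(scores[0])
    return [sum(row[i] for row in scores) / n_students for i in range(n_items)]


def flag_by_difficulty(p_values: list[float],
                       too_hard: float = 0.30,
                       too_easy: float = 0.90) -> dict[int, str]:
    """Label items whose success rate falls outside a hypothetical target band."""
    flags = {}
    for item, p in enumerate(p_values):
        if p < too_hard:
            flags[item] = "too hard: reword, reteach, or spend more time on it"
        elif p > too_easy:
            flags[item] = "too easy: raise difficulty or write stronger alternatives"
    return flags


# Four students, three items.
scores = [
    [1, 0, 1],
    [1, 1, 0],
    [1, 0, 0],
    [1, 0, 1],
]
p = item_difficulty(scores)
percent_incorrect = [round(100 * (1 - x), 1) for x in p]
print(p, percent_incorrect)
print(flag_by_difficulty(p))
```

The percentage of incorrect answers shown in a report like the one described above is simply 100 × (1 − p) for each item.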

2. Item Analysis by Standard

By grouping the analysis of each test item under user-defined learning objectives and standards, this measure shows you which subjects need deeper review in your classroom. In DataLink Connect’s Class Proficiency Item Analysis, the correct answer to each item is highlighted and the overall proficiency for each standard is displayed. Users can upload their own custom learning standards into the software, and provincial core standards are already included for the convenience of our Canadian instructors.

3. Item Discrimination

Also known as item differentiation or the item effect, and commonly measured with the point-biserial correlation, this metric describes the relationship between how well students did on an individual exam item and how well they did on the test overall. If lower-scoring students perform better on a particular item than your higher-scoring students, it most likely means that success on that item is not correlated with mastery of the material. You can review this data in DataLink Connect’s Exam Item Analysis through the Point-Biserial Correlation Indicator shown for each item: the higher the value, the more discriminating the question. Items with values near or below zero should be reviewed and potentially removed from the final results.

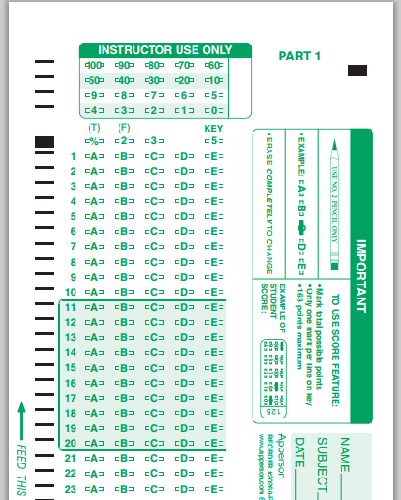
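As a rough illustration of what a point-biserial value measures, the Python sketch below computes it as the ordinary Pearson correlation between one item’s 0/1 scores and each student’s total score (for a right/wrong item, that Pearson correlation is the point-biserial coefficient). It is a sketch under stated assumptions, not DataLink Connect’s implementation: the function name and score layout are hypothetical, and the optional `corrected` flag (leaving the item out of the total, sometimes called the item-rest correlation) is one common variant we added; we don’t know which variant the software reports.

```python
import math


def point_biserial(scores: list[list[int]], item: int, corrected: bool = False) -> float:
    """Point-biserial correlation between one item and students' total scores.

    Assumed layout: scores[s][i] is 1 if student s answered item i correctly,
    else 0. With corrected=True the item is subtracted from each total so the
    item is not correlated with itself.
    """
    item_col = [row[item] for row in scores]
    totals = [sum(row) - (row[item] if corrected else 0) for row in scores]
    n = len(scores)
    mean_x = sum(item_col) / n
    mean_y = sum(totals) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(item_col, totals))
    var_x = sum((x - mean_x) ** 2 for x in item_col)
    var_y = sum((y - mean_y) ** 2 for y in totals)
    if var_x == 0 or var_y == 0:
        # Undefined when every student scored the same on the item (or the test).
        return float("nan")
    return cov / math.sqrt(var_x * var_y)


# Item 3 is answered correctly only by the two lowest scorers.
scores = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
]
for item in range(4):
    print(item, round(point_biserial(scores, item), 2))
```

In this made-up data, item 3 comes out clearly negative, which is exactly the pattern described above: weaker students succeed on it while stronger students don’t, so it is a candidate for review.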

4. Distractor Efficiency

Distractors are the incorrect answer options in a multiple-choice question. Well-written distractors are just as important as well-written correct answers. DataLink Connect’s Exam Item Analysis can show you how often students select each option. Distractors that students rarely choose should be removed, since students evidently found them implausible and they are not real alternatives. Distractors that are selected too often, especially by high performers, should be reviewed to make sure they are not confusing and are not just as plausible as the correct answer.
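The two checks above (distractors that almost nobody picks, and distractors that attract strong students) can be sketched in a few lines of Python. This is a hypothetical illustration, not how DataLink Connect works: the function name, the 5% "rarely chosen" cutoff, and the choice to compare the upper and lower halves of the class by total score are all assumptions made for the example.

```python
from collections import Counter


def distractor_report(choices: list[str], key: str, totals: list[int],
                      options: str = "ABCD", rare: float = 0.05) -> dict:
    """Summarize how each distractor on one item was used.

    choices[s] is the option student s picked, key is the correct option,
    and totals[s] is that student's total test score. Returns
    {option: (share_of_class, flags)} for every option except the key.
    """
    n = len(choices)
    counts = Counter(choices)
    # Rank students by total score; with an odd class size the middle
    # student belongs to neither half.
    order = sorted(range(n), key=lambda s: totals[s], reverse=True)
    half = n // 2
    upper = [choices[s] for s in order[:half]]
    lower = [choices[s] for s in order[n - half:]]

    report = {}
    for opt in options:
        if opt == key:
            continue
        share = counts[opt] / n
        flags = []
        if share < rare:
            flags.append("rarely chosen")
        if upper.count(opt) > lower.count(opt):
            flags.append("attracts high scorers")
        report[opt] = (share, flags)
    return report


choices = ["A", "B", "B", "A", "C", "A", "C", "A"]
totals = [10, 9, 8, 7, 6, 5, 4, 3]
for opt, (share, flags) in distractor_report(choices, "A", totals).items():
    print(opt, f"{share:.0%}", flags)
```

Here option D is never chosen and option B is picked mostly by the top half of the class, so both would deserve a second look before the next exam.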

5. Classroom Performance by Standard

Knowing which standards or learning objectives your students struggled with most helps you plan the rest of the semester and make the most of reviews or tutorials before a midterm or final exam. DataLink Connect’s Class Proficiency Report shows you which core standards you need to revisit. More specifically, it lists the students who did not meet the criteria to be considered proficient in each standard.
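The idea behind a per-standard list of non-proficient students can be sketched in Python as follows: group the test items by the standard they are tagged with, compute each student’s percentage correct on those items, and list everyone below a cutoff. The function name, the standard codes, and the 70% proficiency threshold are hypothetical placeholders; the criteria your school or the software actually uses may differ.

```python
from collections import defaultdict


def non_proficient_students(scores: list[list[int]], students: list[str],
                            item_standard: list[str],
                            threshold: float = 0.70) -> dict[str, list[str]]:
    """List, for each standard, the students scoring below the threshold.

    scores[s][i] is 1 if student s answered item i correctly, else 0, and
    item_standard[i] is the (hypothetical) standard code tagged on item i.
    """
    items_by_std = defaultdict(list)
    for item, std in enumerate(item_standard):
        items_by_std[std].append(item)

    result = {}
    for std, items in items_by_std.items():
        result[std] = [
            name
            for name, row in zip(students, scores)
            if sum(row[i] for i in items) / len(items) < threshold
        ]
    return result


students = ["Ava", "Ben", "Cai"]
item_standard = ["N1", "N1", "G2", "G2"]  # hypothetical standard codes
scores = [
    [1, 1, 0, 1],  # Ava
    [1, 0, 1, 1],  # Ben
    [0, 0, 1, 1],  # Cai
]
print(non_proficient_students(scores, students, item_standard))
```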

6. Internal Consistency

A test is most reliable when scores reflect only a student’s mastery of the material rather than luck or other external factors. One way to measure the reliability of your assessment is internal consistency: whether items that are meant to measure the same standard produce similar scores. You can examine internal consistency through the Class Proficiency Item Analysis, which summarizes how well your students performed on each standard or learning objective.

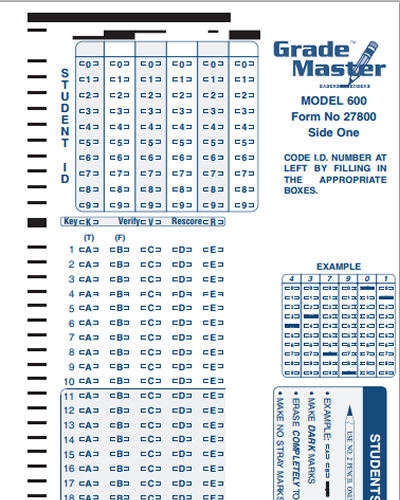

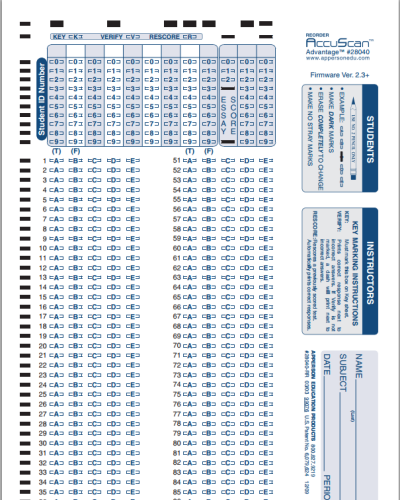

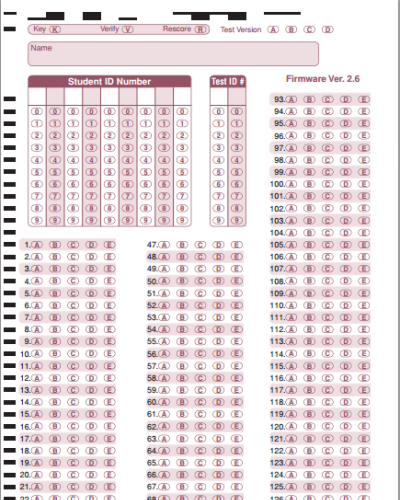

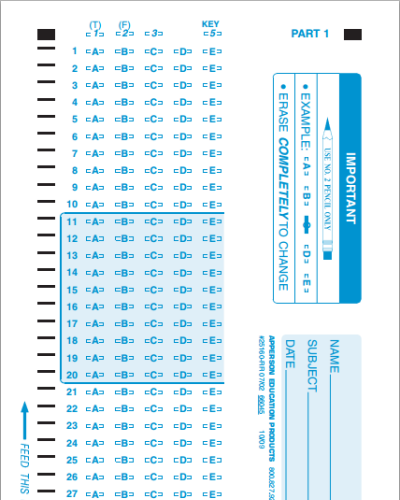

If you would like to learn more about how you can harness the DataLink Connect software to enhance the analysis of your multiple choice assessments, check out the short video below. The DataLink Connect software is included for free with every DataLink scanner and is compatible with 25 popular gradebooks and institutional databases.
